The simplest way to install OpenShift on AWS is the IPI (Installer Provisioned Infrastructure) method. With IPI, the installer provisions the necessary VPC and other infrastructure resources, then installs OpenShift onto that infrastructure. Sounds good, but for the curious (and the paranoid): exactly which AWS resources does the installer create? Let's take a look.

installation inputs

[UPDATE: 24-March-2021] - Red Hat OpenShift Service on AWS (ROSA) is now generally available!

[UPDATE: 18-March-2021] - This article has been updated to use OpenShift version 4.7.2

If you have a Red Hat Developer’s account (http://developers.redhat.com), just follow this link to download the OpenShift installer for AWS (IPI), as well as the pull secret → https://cloud.redhat.com/openshift/install/aws/installer-provisioned

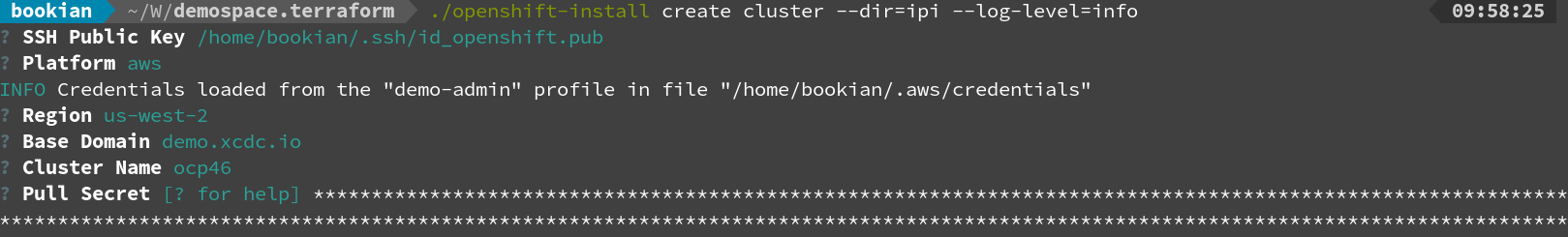

We’ll need an AWS account (and access keys) to run the installer. We also need to prepare an SSH public key and a Route 53 public hosted zone (the base domain). Once we have all of these, we can create the cluster using:

    ./openshift-install create cluster --dir=<working-dir>

The installer will prompt for a few pieces of information, and after around 40 minutes you should have your OpenShift cluster.

but what actually happened?

The installation process uses Terraform to provision the VPC and other AWS resources. EC2 instances with base OpenShift images are then launched to form the cluster and install the core OpenShift operators.

Post installation, you’ll see a few files created in the installation working directory (ipi in my case), including terraform.tfstate, which describes the resources created by the installation process. In addition, a few IAM users are provisioned by OpenShift operators (e.g. the openshift-image-registry operator).
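To get a quick inventory out of terraform.tfstate, a small script can count the resources by type. This is only a sketch: it assumes the state file uses the Terraform state format version 4 layout (a top-level `resources` list whose entries carry `mode`, `type` and `instances`), and the sample data below is hand-written for illustration, not real installer output.

```python
import json
from collections import Counter


def summarize_tfstate(state: dict) -> Counter:
    """Count resource instances per Terraform resource type."""
    counts = Counter()
    for resource in state.get("resources", []):
        # Data sources are lookups, not infrastructure that was created.
        if resource.get("mode") != "managed":
            continue
        counts[resource["type"]] += len(resource.get("instances", []))
    return counts


def summarize_tfstate_file(path: str) -> Counter:
    """Load a state file from disk and summarize it."""
    with open(path) as handle:
        return summarize_tfstate(json.load(handle))


# Tiny hand-written sample (hypothetical names) to show the expected shape.
SAMPLE_STATE = {
    "version": 4,
    "resources": [
        {"mode": "managed", "type": "aws_vpc", "name": "new_vpc",
         "instances": [{}]},
        {"mode": "managed", "type": "aws_subnet", "name": "private_subnet",
         "instances": [{}, {}, {}]},
        {"mode": "data", "type": "aws_region", "name": "current",
         "instances": [{}]},
    ],
}

for resource_type, count in sorted(summarize_tfstate(SAMPLE_STATE).items()):
    print(f"{count:3d}  {resource_type}")
```

Against a real installation, something like `summarize_tfstate_file("ipi/terraform.tfstate")` should give a per-type count to compare with what the AWS console shows.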

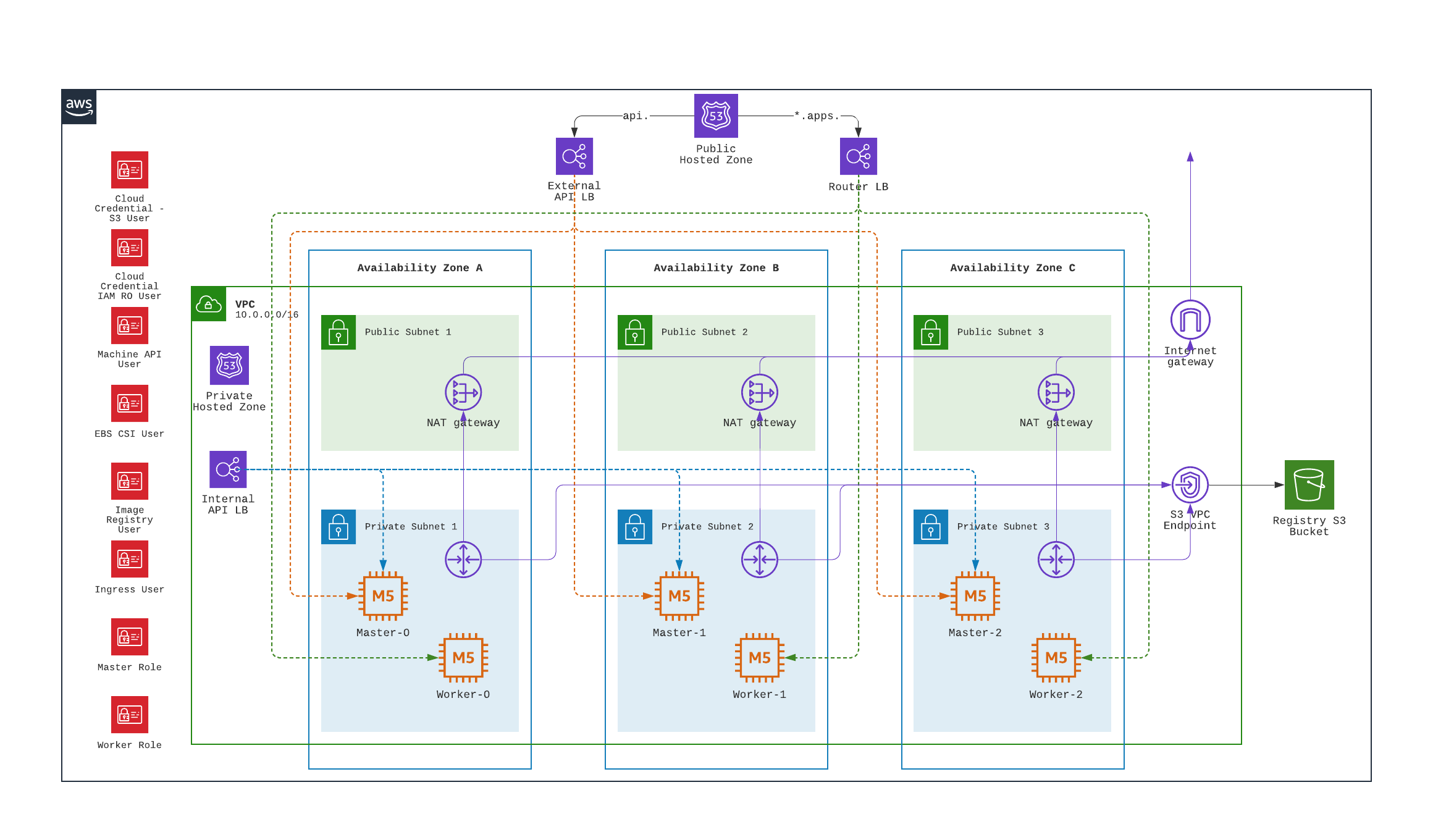

Here’s a graphical representation of the results:

users, roles

Let’s start with the IAM resources (remember the paranoid?). Head over to the AWS IAM console and you’ll see the following IAM resources created:

| Name* | Type | Created/Used By |
|---|---|---|
| aws-ebs-csi-driver-operator* | User | |
| cloud-credential-operator-iam-ro | User | |
| openshift-image-registry | User | |
| openshift-ingress | User | |
| openshift-machine-api-aws | User | |
| master-role | Role | Installer |
| worker-role | Role | Installer |

* there will be a prefix and suffix to the user/role names, for example `ocp46-pj42g-aws-ebs-csi-driver-operator-djdvq`

Each user and role above has an inline policy attached that grants access to the necessary AWS resources. We can use AWS IAM permissions boundaries to apply more restrictive permissions if desired. Do consult Red Hat’s support if you plan to do this.
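As a rough mental model of how a permissions boundary narrows access: a request succeeds only if both the identity policy and the boundary allow it, and an explicit Deny in either one wins. The sketch below is a deliberately simplified evaluator, not AWS's actual engine. It ignores conditions, NotAction, resource-based policies and SCPs, and it matches case-sensitively. The bucket name `registry-bucket` is hypothetical.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM allows a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def policy_decision(policy: dict, action: str, resource: str) -> str:
    """Return 'deny', 'allow' or 'none' for one policy document."""
    decision = "none"
    for statement in policy["Statement"]:
        action_hit = any(fnmatchcase(action, pattern)
                         for pattern in _as_list(statement["Action"]))
        resource_hit = any(fnmatchcase(resource, pattern)
                           for pattern in _as_list(statement["Resource"]))
        if action_hit and resource_hit:
            if statement["Effect"] == "Deny":
                return "deny"  # an explicit deny always wins
            decision = "allow"
    return decision


def is_allowed(identity_policy: dict, boundary: dict,
               action: str, resource: str) -> bool:
    """Allowed only when BOTH documents allow and neither denies."""
    return (policy_decision(identity_policy, action, resource) == "allow"
            and policy_decision(boundary, action, resource) == "allow")


# Hypothetical, trimmed-down policies for illustration only.
IDENTITY = {"Statement": [
    {"Effect": "Allow",
     "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteBucket"],
     "Resource": "*"},
]}
BOUNDARY = {"Statement": [
    {"Effect": "Allow",
     "Action": ["s3:GetObject", "s3:PutObject"],
     "Resource": ["arn:aws:s3:::registry-bucket",
                  "arn:aws:s3:::registry-bucket/*"]},
    {"Effect": "Deny", "Action": "s3:DeleteBucket", "Resource": "*"},
]}

for bucket in ("registry-bucket", "other-bucket"):
    arn = f"arn:aws:s3:::{bucket}/some-object"
    print(bucket, is_allowed(IDENTITY, BOUNDARY, "s3:GetObject", arn))
```

In this model the identity policy alone would allow reading any bucket, but with the boundary in place only objects in the named bucket remain reachable.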

For instance, the policy for the image registry user is as follows:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "s3:CreateBucket",
            "s3:DeleteBucket",
            "s3:PutBucketTagging",
            "s3:GetBucketTagging",
            "s3:PutBucketPublicAccessBlock",
            "s3:GetBucketPublicAccessBlock",
            "s3:PutEncryptionConfiguration",
            "s3:GetEncryptionConfiguration",
            "s3:PutLifecycleConfiguration",
            "s3:GetLifecycleConfiguration",
            "s3:GetBucketLocation",
            "s3:ListBucket",
            "s3:HeadBucket",
            "s3:GetObject",
            "s3:PutObject",
            "s3:DeleteObject",
            "s3:ListBucketMultipartUploads",
            "s3:AbortMultipartUpload"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "iam:GetUser"
          ],
          "Resource": "<registry-user-arn>"
        }
      ]
    }

We can define a permissions boundary like the one below to restrict operations such as GetObject and PutObject to only the image registry S3 bucket (instead of any bucket in the account):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "RegistryPermBoundary",
          "Effect": "Allow",
          "Action": [
            "s3:GetBucketPublicAccessBlock",
            "s3:GetLifecycleConfiguration",
            "s3:ListBucketMultipartUploads",
            "s3:GetBucketTagging",
            "s3:PutBucketPublicAccessBlock",
            "s3:PutEncryptionConfiguration",
            "s3:PutObject",
            "s3:GetObject",
            "s3:GetEncryptionConfiguration",
            "s3:AbortMultipartUpload",
            "s3:PutBucketTagging",
            "s3:PutLifecycleConfiguration",
            "s3:GetBucketLocation",
            "s3:DeleteObject"
          ],
          "Resource": [
            "<bucket-arn>",
            "<bucket-arn>/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "s3:ListBucket",
            "s3:HeadBucket"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "iam:GetUser"
          ],
          "Resource": "<registry-user-arn>"
        },
        {
          "Effect": "Deny",
          "Action": "s3:DeleteBucket",
          "Resource": "*"
        }
      ]
    }

First, create a policy from the above JSON using:

    aws iam create-policy --policy-name <policy-name> --policy-document file://<path-to-policy-file>

Then attach the permissions boundary to the image registry user:

    aws iam put-user-permissions-boundary --permissions-boundary <policy-arn> --user-name <openshift-image-registry-user-name>

vpc

Next, we’ll examine the VPC resources created. The installation process creates a public and a private subnet in each availability zone of the selected AWS region. All master and worker nodes are launched in the private subnets. The nodes reach the Internet via NAT gateways launched in the public subnet of the same AZ.
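To picture the "one public plus one private subnet per AZ" layout, here is a purely illustrative sketch that carves a VPC CIDR into equal blocks. The 10.0.0.0/16 range, the /20 subnet size, the zone names and the allocation order are all assumptions made for this example. The installer's actual CIDR allocation may well differ, so check the subnets in your own account.

```python
import ipaddress


def carve_subnets(vpc_cidr: str, zones: list, new_prefix: int = 20) -> dict:
    """Assign one private and one public block to each availability zone."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    layout = {}
    for zone in zones:
        # Hypothetical ordering: private first, then public, zone by zone.
        layout[zone] = {"private": next(blocks), "public": next(blocks)}
    return layout


# Hypothetical region/zones and VPC range.
LAYOUT = carve_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b", "us-east-1c"])

for zone, subnets in LAYOUT.items():
    print(zone, "private:", subnets["private"], "public:", subnets["public"])
```

The point is only the shape: nodes sit in the private block of their zone, and the NAT gateway for that zone sits in the matching public block.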

load balancers

Two separate network load balancers are provisioned to serve internal (ports 6443, 22623) and external (port 6443) API requests to the masters.

A third, classic load balancer is provisioned to serve application ingress (ports 80, 443).
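A quick way to sanity-check which ports a load balancer actually exposes is a plain TCP connect test. The helper below is a minimal sketch using only the standard library. It says nothing about TLS or whether the service behind the port is healthy, and the host names you pass in are your own.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False
```

For example, from outside the VPC one would expect `port_is_open("<external-api-hostname>", 6443)` to succeed, while port 22623 should only be reachable through the internal load balancer.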

dns

The installation process will create a private hosted zone for the cluster. In my case, the cluster name is ocp46 and my base domain is demo.xcdc.io, so the private hosted zone is ocp46.demo.xcdc.io. This hosted zone contains DNS entries for the internal and external API endpoints, as well as the wildcard entry for application ingress.

DNS entries are also created in the supplied public hosted zone (demo.xcdc.io in my case) to publish the DNS names for the API endpoint (api.ocp46.demo.xcdc.io) and the wildcard entry (`*.apps.ocp46.demo.xcdc.io`), pointing to the respective load balancers above.
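The naming scheme can be summed up in a few lines. This sketch derives the record names from the cluster name and base domain. The `api-int` name for the internal API endpoint is an assumption based on the usual OpenShift 4 convention, so compare it with the records in your own hosted zones.

```python
def cluster_dns_names(cluster_name: str, base_domain: str) -> dict:
    """Build the DNS names expected for a cluster under a base domain."""
    cluster_domain = f"{cluster_name}.{base_domain}"
    return {
        "zone": cluster_domain,                       # private hosted zone
        "api": f"api.{cluster_domain}",               # external API endpoint
        "api_int": f"api-int.{cluster_domain}",       # internal API (assumed name)
        "apps_wildcard": f"*.apps.{cluster_domain}",  # application ingress
    }


for label, name in cluster_dns_names("ocp46", "demo.xcdc.io").items():
    print(f"{label:14s} {name}")
```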

security groups

A security group is attached to the application ingress load balancer to allow only HTTP and HTTPS traffic.

The worker nodes’ security group allows traffic from the ingress load balancers, plus selected traffic from the master nodes and other worker nodes, as listed below:

| ports | protocols | source | description |
|---|---|---|---|
| all | all | ingress load balancers | ingress traffic |
| all | icmp | vpc | ICMP |
| all | ESP (50) | workers, masters | IPSec |
| 500, 4500 | udp | workers, masters | IPSec |
| 22 | tcp | vpc | SSH |
| 4789 | udp | workers, masters | VXLAN packets |
| 6081 | udp | workers, masters | GENEVE packets |
| 9000 - 9999 | tcp, udp | workers, masters | Internal cluster communication |
| 10250 | tcp | workers, masters | Kubernetes kubelet, scheduler and controller manager |
| 30000 - 32767 | tcp, udp | workers, masters | Kubernetes ingress services |

The master nodes’ security group allows selected traffic from workers and other master nodes:

| ports | protocols | source | description |
|---|---|---|---|
| all | icmp | vpc | ICMP |
| all | ESP (50) | workers, masters | IPSec |
| 500, 4500 | udp | workers, masters | IPSec |
| 22 | tcp | vpc | SSH |
| 2379 - 2380 | tcp | masters | etcd |
| 4789 | udp | workers, masters | VXLAN packets |
| 6081 | udp | workers, masters | GENEVE packets |
| 6443 | tcp | vpc | api access |
| 6641 - 6642 | tcp | workers, masters | OVN packets |
| 9000 - 9999 | tcp, udp | workers, masters | Internal cluster communication |
| 10250 | tcp | workers, masters | Kubernetes kubelet, scheduler and controller manager |
| 10257 | tcp | workers, masters | Kubernetes kubelet, scheduler and controller manager |
| 10259 | tcp | workers, masters | Kubernetes kubelet, scheduler and controller manager |
| 22623 | tcp | vpc | machine config service |
| 30000 - 32767 | tcp, udp | workers, masters | Kubernetes ingress services |
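To reason about the rules above, it can help to treat them as data and ask "would this connection be admitted?". The sketch below encodes a handful of the master rules (not the full table) and uses simple source labels instead of real security group IDs or CIDRs. Treating a `vpc` rule as matching any in-VPC source is a simplification made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    first_port: int
    last_port: int
    protocols: frozenset
    sources: frozenset
    description: str


# A subset of the master security group rules from the table above.
MASTER_RULES = [
    Rule(22, 22, frozenset({"tcp"}), frozenset({"vpc"}), "SSH"),
    Rule(2379, 2380, frozenset({"tcp"}), frozenset({"masters"}), "etcd"),
    Rule(6443, 6443, frozenset({"tcp"}), frozenset({"vpc"}), "api access"),
    Rule(22623, 22623, frozenset({"tcp"}), frozenset({"vpc"}),
         "machine config service"),
    Rule(30000, 32767, frozenset({"tcp", "udp"}),
         frozenset({"workers", "masters"}), "Kubernetes ingress services"),
]


def matching_rules(rules, port: int, protocol: str, source: str) -> list:
    """Return the rules that would admit this connection."""
    return [
        rule for rule in rules
        if rule.first_port <= port <= rule.last_port
        and protocol in rule.protocols
        # A "vpc" rule admits any source inside the VPC, workers included.
        and ("vpc" in rule.sources or source in rule.sources)
    ]


for source in ("masters", "workers"):
    hits = matching_rules(MASTER_RULES, 2379, "tcp", source)
    print(source, "-> etcd:", [rule.description for rule in hits])
```

In this model only other masters can reach etcd on 2379, while the API port 6443 is open to anything inside the VPC.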

what’s next?

This post describes the default infrastructure set up by the OpenShift installer. It is possible to apply customizations such as CIDR ranges, machine instance types, etc. Red Hat’s documentation has a good section on this.

It is also possible to perform an OpenShift IPI installation into an existing VPC.

Last but not least, OpenShift will be available as a managed service on AWS soon! Here’s the announcement for Red Hat OpenShift Service on AWS.